As voice assistants and voice-activated Internet of Things devices work their way into people's homes, there are plenty of new opportunities for developers and businesses. Creating an app that obeys voice commands and interacts with a third-party voice assistant is a chance to reach a rapidly growing market of tech-savvy consumers.

But you must proceed with caution. There are significant privacy issues associated with this type of technology. There's a real danger of scaring people away if you aren't totally transparent.

- 1. Are Voice Assistants Spying On Us?

- 2. Developing a Privacy-Conscious Approach

- 3. Understanding Your Obligations

- 3.1. Privacy Law

- 3.1.1. United States

- 3.1.2. European Union

- 3.2. Third Parties

- 3.2.1. Amazon (Alexa)

- 3.2.2. Apple (Siri)

- 3.2.3. Google (Google Assistant)

- 3.2.4. Microsoft (Cortana)

- 4. Being Transparent With Your Users

- 4.1. Your Privacy Policy

- 5. Obtaining Your Users' Consent

- 5.1. Apple

- 5.2. Google

- 6. Summary

Let's take a look at how you can find your feet in this developing marketplace while obeying the law and respecting your users' privacy.

Are Voice Assistants Spying On Us?

Voice assistants are sometimes compared to the "telescreen" from George Orwell's dystopian classic, 1984: a device that sits in the corner of your home, listening to your family and sharing your conversations with some unknown third party.

Indeed, in 2015 Samsung made headlines by warning its customers against discussing personal information in front of its voice-controlled smart TV.

Research by Microsoft from April 2019 suggests that 75 percent of US households will contain a smart speaker by 2020. But this emergent technology is not yet fully understood by members of the public, who often think that their speech is being monitored at all times.

While voice assistants are "always listening" at the local level, they do not transmit any information until they hear the trigger phrase ("OK Google," "Hey Siri," etc.).
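To make the distinction between local listening and transmission concrete, here is a minimal sketch of wake-word gating. It is a toy model, not how any vendor actually implements it: text chunks stand in for audio frames, string matching stands in for an acoustic wake-word detector, and the `WakeWordGate` class, its `hear` method, and the `transmitted` list are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class WakeWordGate:
    """Toy model of on-device wake-word gating (hypothetical, for illustration).

    Text chunks stand in for audio frames; a real device would run an
    acoustic wake-word detector rather than compare strings.
    """
    wake_phrase: str = "ok google"  # illustrative trigger phrase
    awake: bool = False
    transmitted: list = field(default_factory=list)  # stand-in for the network

    def hear(self, chunk: str) -> None:
        """Process one chunk locally; forward it only once the device is awake."""
        text = chunk.strip().lower()
        if not self.awake:
            # Before the trigger: inspect locally, then discard.
            if text == self.wake_phrase:
                self.awake = True
            return
        # After the trigger: this single command leaves the device.
        self.transmitted.append(chunk)
        self.awake = False  # return to local-only listening


gate = WakeWordGate()
for chunk in ["private family chat", "OK Google", "set an alarm for 7", "more private chat"]:
    gate.hear(chunk)

print(gate.transmitted)  # ['set an alarm for 7']
```

Only the command that follows the trigger phrase ends up in `transmitted`; everything else is examined on the device and dropped. A false trigger would still send whatever was said next.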

Of course, this in itself is sufficient to create a significant privacy risk. Even people who regularly use voice assistant technology are aware of this. Research from Accenture UK suggests that 40 percent of voice assistant users are concerned about "who is listening" and how their data is used.

Developing a Privacy-Conscious Approach

While it's true that some misunderstandings might have led to overblown worries about the privacy implications of voice assistants, there are nonetheless certain precautions that you must take if you're planning to enter this field.

If your company is planning to embrace this technology, you'll need to do the following:

- Understand your legal and contractual obligations

- Be transparent with your users

- Get consent to record and transmit your users' data

We're going to work through how you can implement this three-part strategy and launch a safe and compliant product that makes the most out of voice assistant technology.

Understanding Your Obligations

There are two types of obligations on any business developing a product or service in this area:

- Legal obligations under privacy and data protection law

- Contractual obligations under the Terms and Conditions of third parties (for example, Google or Amazon)

Privacy Law

Privacy law is developing fast but is struggling to keep pace with technology.

Before you decide to use voice assistant technology, it's crucial that you're aware of the laws that will apply to you. You must consider the laws of the countries in which your company is based as well as those in which your users are based.

Every major economy has some form of privacy law. Let's take a look at the state of privacy law in two of the world's most important markets - the United States and the European Union.

United States

Privacy law in the United States is fairly patchy right now. However, the direction of travel is unarguably towards tighter regulation and greater consumer rights.

California, which has long been a frontrunner in protecting its residents' privacy, has recently passed some of the strictest data protection legislation that the US has ever seen with the California Consumer Privacy Act (CCPA).

Whilst the CCPA isn't directly applicable to most small companies, it should be taken as a sign of things to come. When stricter privacy regulations come into force, your company will have a significant competitive advantage if it is already fundamentally compliant.

It's also important to be aware that the US does have quite a strict privacy law protecting children, the Children's Online Privacy Protection Act (COPPA). It has even been suggested that voice assistant technology itself is in fundamental violation of COPPA.

European Union

The European Union is home to the world's most comprehensive and powerful data protection law, the General Data Protection Regulation (GDPR).

The GDPR covers almost all commercial activity in the EU that involves the processing of people's personal data. The recordings transmitted by voice assistants fall firmly within its scope.

Any company operating in the EU (whether based there or not) will need to think very carefully about why and how it is using voice assistant technology. Violations of the GDPR can lead to financially crippling penalties. And this is a law that is easy to violate if you don't take the time to understand how it applies to your business.

Third Parties

Voice assistant companies are in fierce competition. One way in which such companies are expanding is by encouraging third-party developers and manufacturers to create products that integrate with their voice assistant software.

There are two common ways for developers to get on board with this:

- Developing an app that integrates into third-party voice assistant software. For example, you might be developing an app with features that can be directly accessed via Google Assistant ("OK Google, where can I get a dinner reservation nearby?")

- Creating a device that integrates third-party voice assistant software. For example, you might be engineering a smart home appliance that can be controlled via Apple's Siri ("Siri, turn the kettle on")

While voice assistant software providers are eager to get third parties involved, they must ensure that these third parties adhere to high standards of privacy.

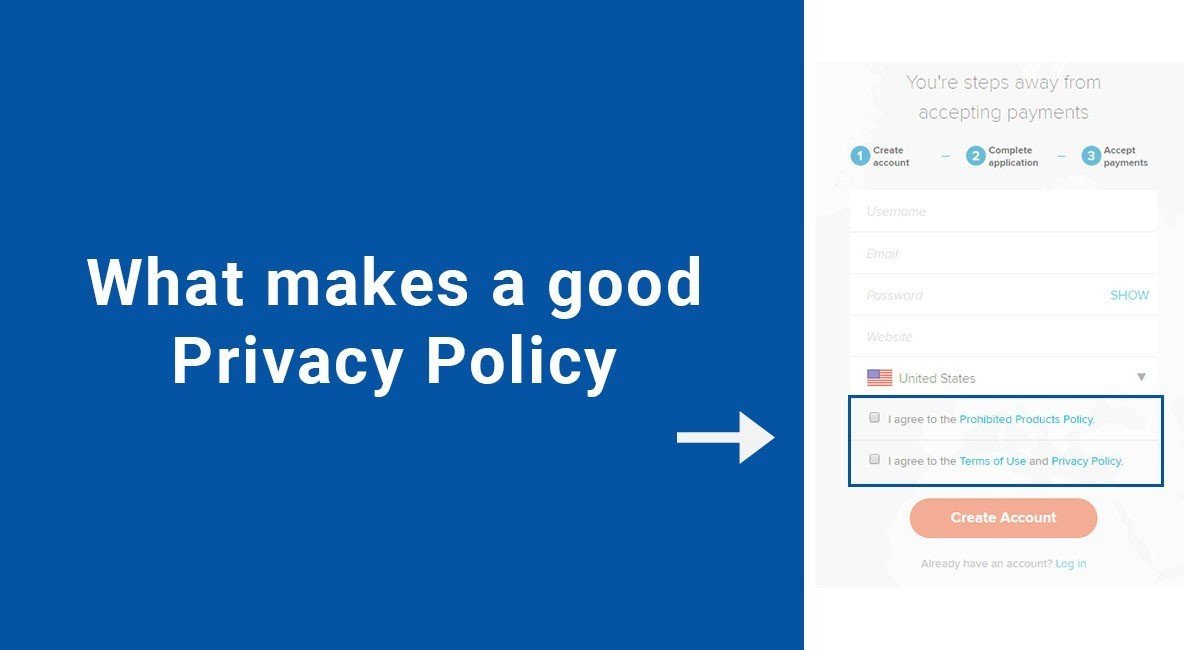

Even a mobile app that collects the most basic of personal information will require a Privacy Policy. This is part of the Terms and Conditions to which developers must agree before their content can appear on platforms such as Google's Play Store and Apple's App Store.

And if your product or service has significant privacy implications then you are under an even greater obligation to be careful and transparent.

Amazon (Alexa)

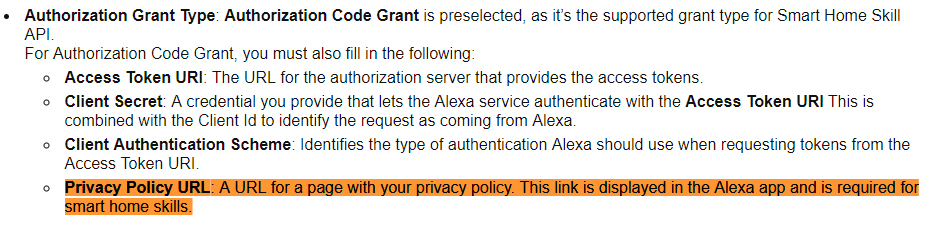

Developers of Amazon Alexa Skills (apps capable of interacting with Alexa) are required to create a Privacy Policy and submit it to Amazon.

Amazon also provides its Smart Home Skill API so developers of smart home devices can enable them to be controlled via Alexa. This, too, requires a Privacy Policy, as explained in Amazon's article on Creating Your First Smart Home Skill:

Amazon goes to considerable lengths to reassure its customers that using Alexa will not violate their privacy, for example in its Alexa, Echo Devices and Your Privacy FAQ. Alexa Skills developers play an essential role in promoting privacy.

Apple (Siri)

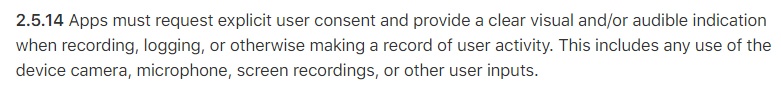

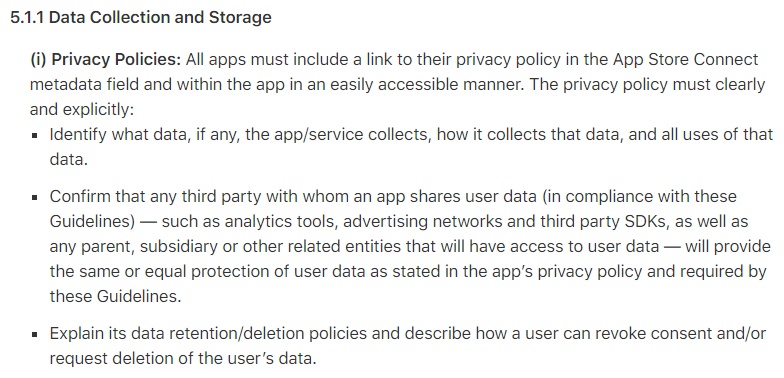

Apple is particularly strict with regard to user privacy across all of its products. Here are some excerpts from the App Store Review Guidelines that are relevant to using voice commands within an iOS app.

If you want your app to use voice commands, you'll need your users' permission before you can access their device's microphone:

All iOS apps hosted in the App Store must be accompanied by a Privacy Policy, and this must be accessible from within the app itself:

Apple's reputation for respecting customer privacy depends on third-party developers obeying its rules.

Google (Google Assistant)

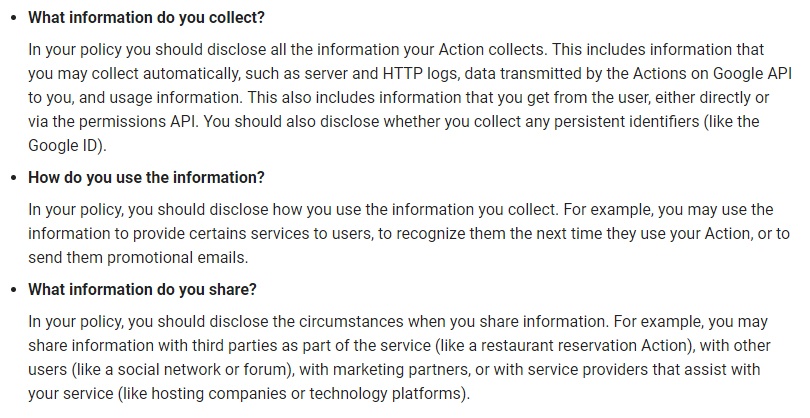

Developers can integrate Google Assistant into their apps or devices by developing an Action via Actions on Google. Google policy requires you to maintain a Privacy Policy which "comprehensively and accurately disclose[s] all of your privacy practices."

Here's some of the basic information that a Privacy Policy must contain, according to the Actions on Google Privacy Policy guidance:

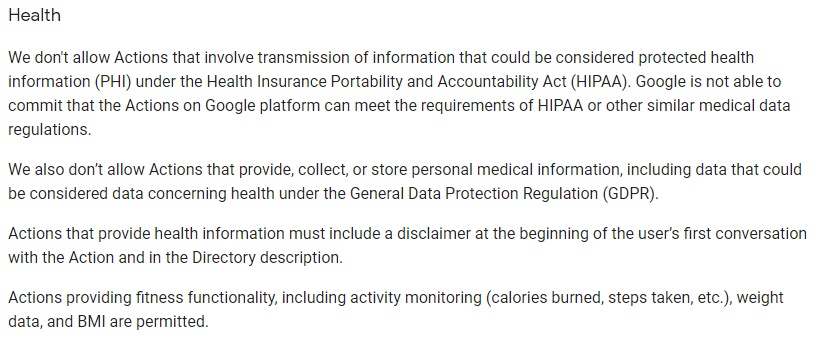

It's also important to note that collecting health data via Actions on Google is forbidden:

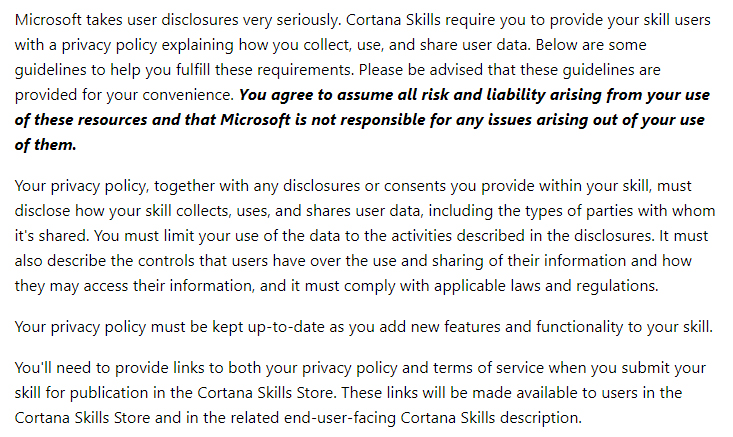

Microsoft (Cortana)

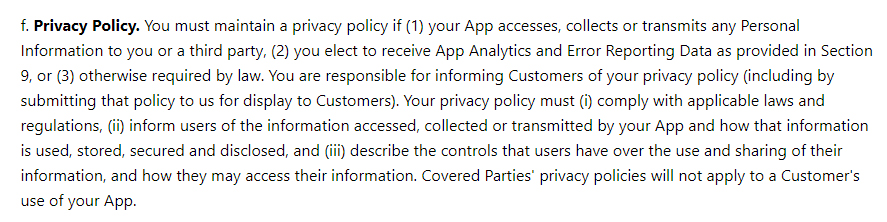

Microsoft's App Developer Terms require all developers to supply a Privacy Policy before it will host their app in its store:

And Microsoft's Privacy Policy Guidelines specifically require developers of Cortana Skills to provide a Privacy Policy:

Being Transparent With Your Users

Companies like Amazon and Google supposedly put forth great efforts to anonymize the data they receive through their voice assistant software. Besides which, you might be wondering whether short audio clips of people asking Alexa to turn on the radio or using Siri to set an alarm can really constitute personal information.

However, there is little doubt that the information collected by apps and devices that integrate voice assistants can be highly personal.

In 2018, an Amazon user made a request for all the personal information Amazon held on him. Amazon erroneously sent this user 1,700 audio files collected from a different user via Alexa, including Spotify commands and public transport inquiries. The recipient sent the files to the German magazine C't, who were easily able to work out the mystery user's identity - and that of his girlfriend.

Privacy law is concerned with exactly this sort of problem. While your company won't be responsible for storing data provided to voice assistants with which your app interacts, it's important that your users are told what will happen to the data processed via your product.

Your Privacy Policy

As we've seen, a Privacy Policy is required both by law and under the Terms and Conditions of third party companies.

Your Privacy Policy should tell your users (and anyone else who wants to know) how and why your company uses personal information.

The exact contents of your Privacy Policy will depend on which laws you're complying with, the third-party voice assistant software providers you're working with, and the nature of your business.

Commonly, a Privacy Policy will include information about:

- What personal information you collect

- How and why you collect personal information

- What you do with the personal information you collect

- Who you share personal information with

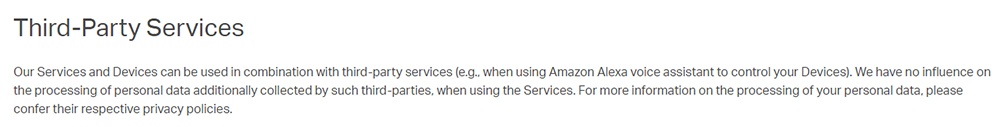

If your product integrates with a third-party voice assistant, then it is collecting personal information and transmitting it to that third party (e.g. Google, Microsoft, etc). It's important to be clear about this.

Here's an example from KasaSmart. The company informs its users that their personal information may be transferred to Amazon when using its Alexa-integrated functions:

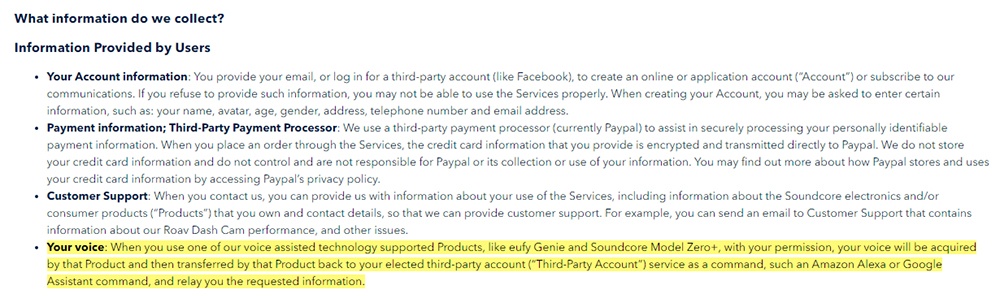

Here's another example from Soundcore, in which the company explains the implications of using its product's voice-activated features:

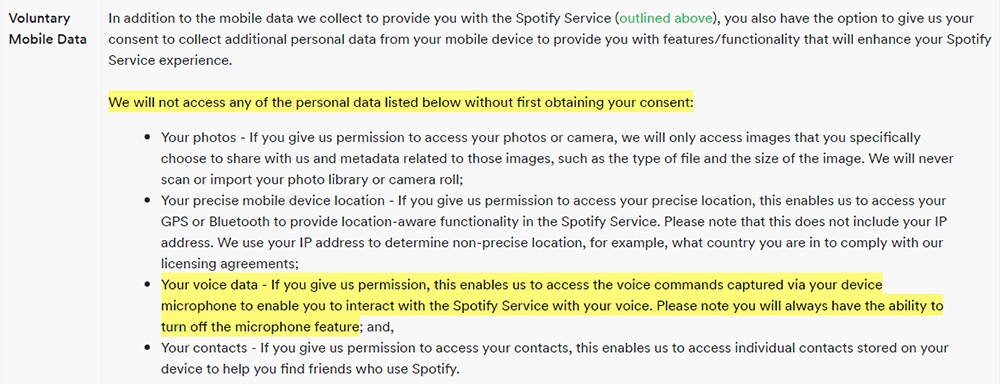

And here's how Spotify explains how it processes voice data provided by its users:

Spotify explains that its use of voice data is contingent on its users' consent. Obtaining your users' consent is an essential part of using a voice assistant, as we'll see below.

- Click on the "Start the Privacy Policy Generator" button.

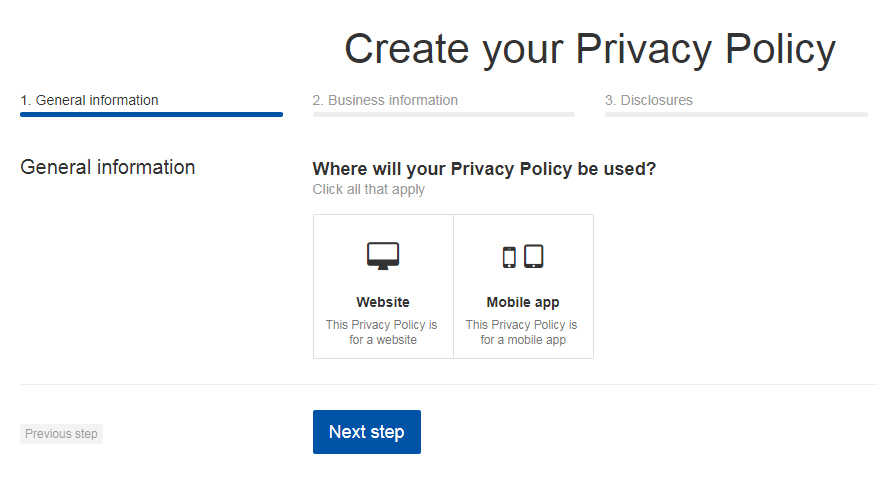

- At Step 1, select the Website option and click "Next step":

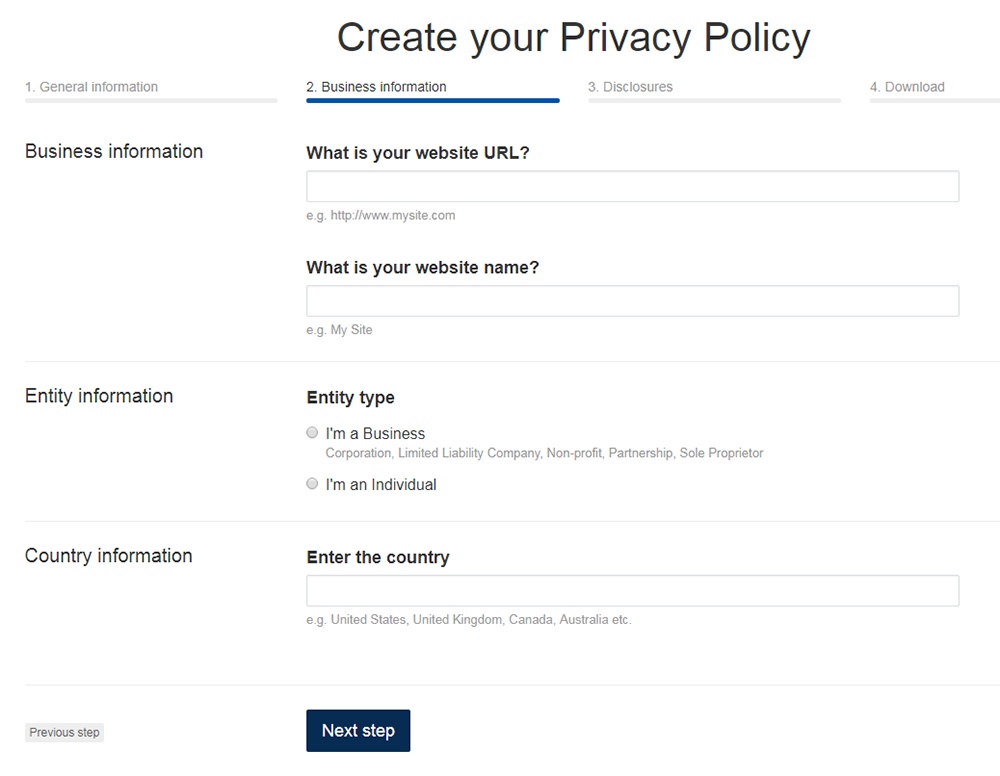

- Answer the questions about your website and click "Next step" when finished:

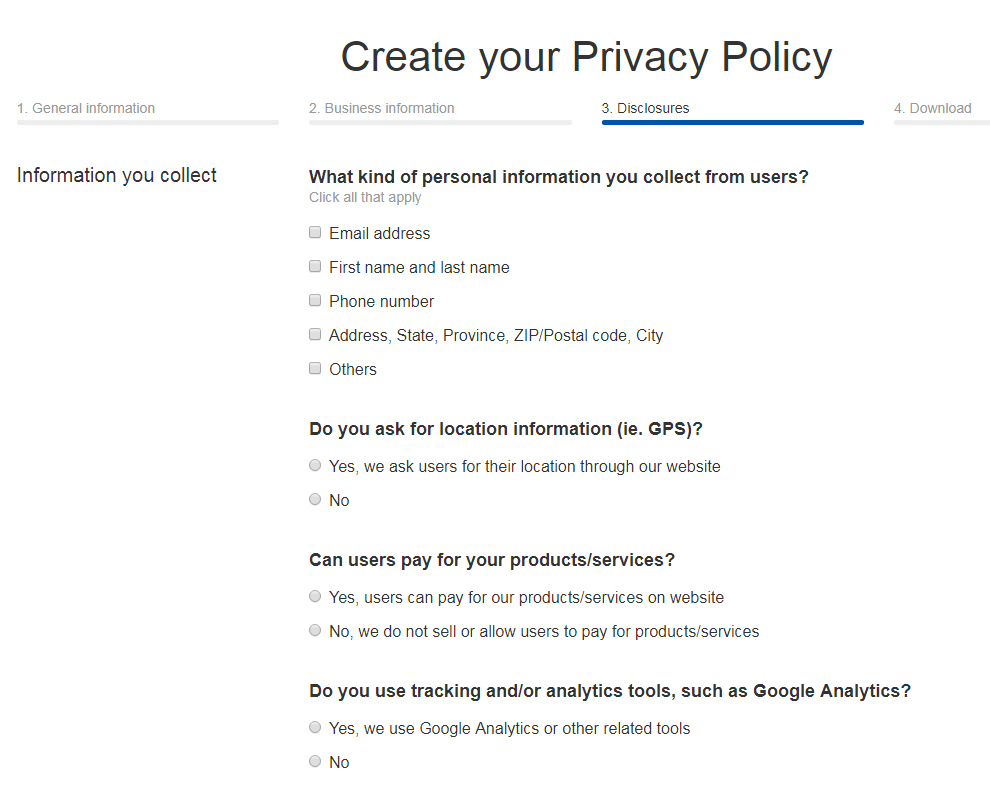

- Answer the questions about your business practices and click "Next step" when finished:

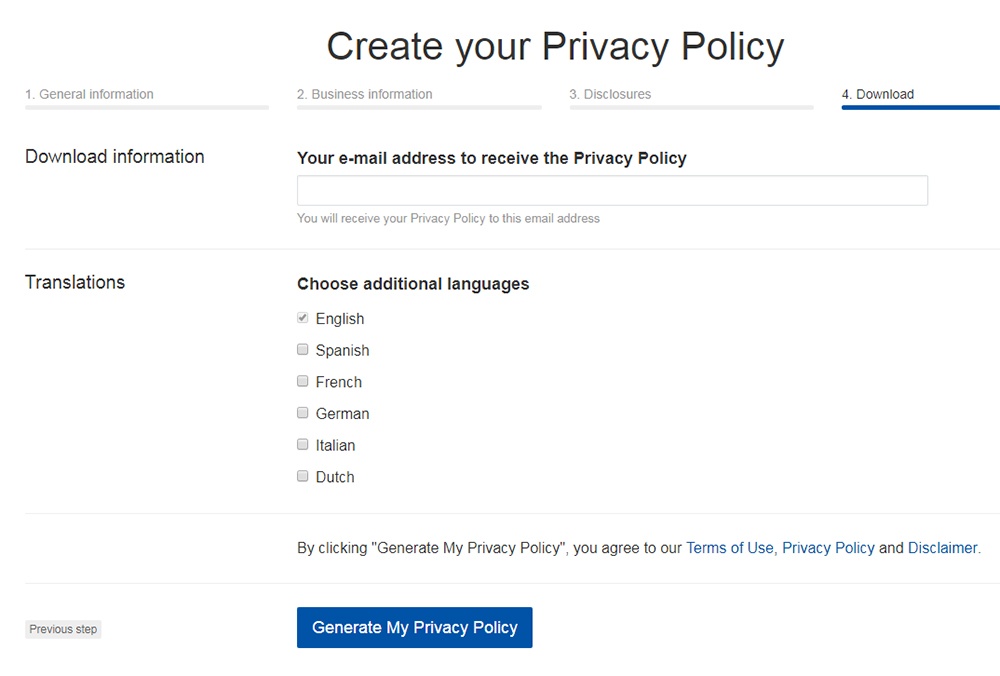

- Enter your email address where you'd like your policy sent, select translation versions and click "Generate My Privacy Policy." You'll be able to instantly access and download your new Privacy Policy:

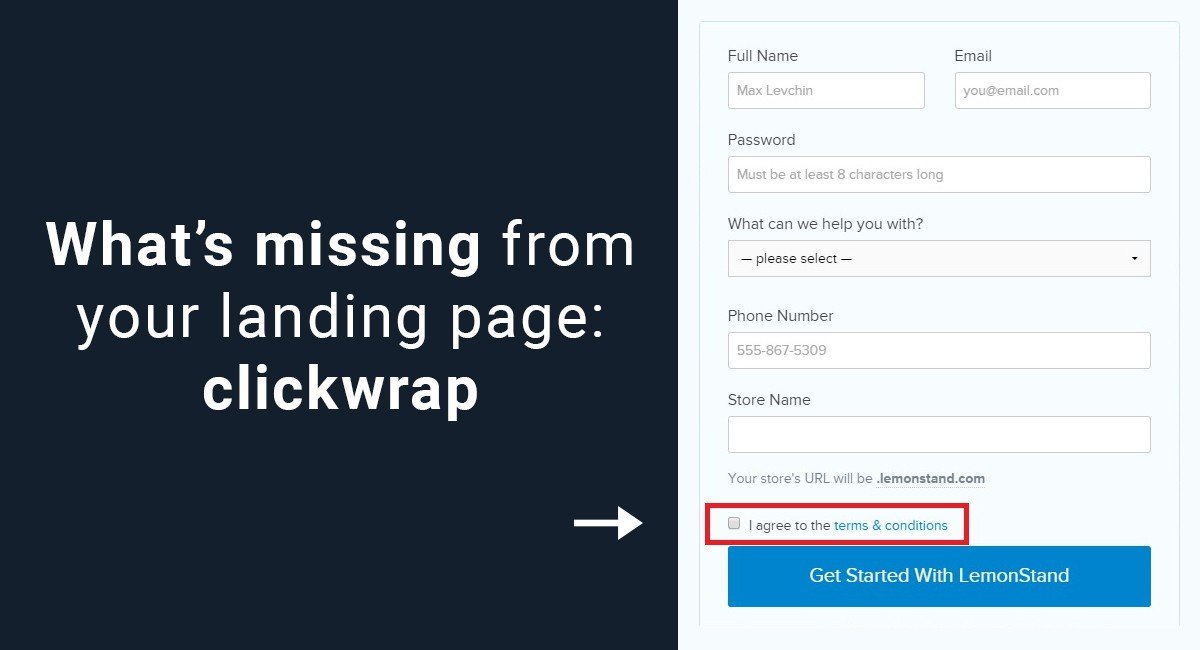

Obtaining Your Users' Consent

The expansion of voice assistant technology means that people are increasingly comfortable with the potential privacy implications. However, the fact remains that recording a person's voice and transmitting it to a third party is potentially very intrusive.

Certain privacy laws, most notably the GDPR, require companies to request consent from their customers before they use their personal information in particular ways.

Requesting consent is most commonly associated with email marketing, but it can be relevant to any situation where you wish to collect or use someone's personal data in a way that they might not reasonably expect.

So, getting consent for the recording and transmitting of voice commands is a legal requirement in certain circumstances. This will depend on the laws of the countries in which your users are based.
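As a rough illustration of what "consent before recording and transmitting" can look like in code, the sketch below refuses to forward voice data unless consent has been recorded for that user and purpose. Everything here is an assumption made for the example: the `ConsentLedger` class, the `"voice_transmission"` purpose string, and the `outbox` list standing in for the third-party service. A real product would persist consent records and follow the requirements of whichever laws apply.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class ConsentRequired(Exception):
    """Raised when voice data would be sent without recorded consent."""


@dataclass
class ConsentLedger:
    """In-memory record of who agreed to what, and when (hypothetical)."""
    _grants: dict = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        # Store a timestamp so the consent can be evidenced later.
        self._grants[(user_id, purpose)] = datetime.now(timezone.utc)

    def withdraw(self, user_id: str, purpose: str) -> None:
        self._grants.pop((user_id, purpose), None)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        return (user_id, purpose) in self._grants


def send_voice_command(ledger, user_id, audio, outbox):
    """Append audio to `outbox` (a stand-in for the third party) only with consent."""
    if not ledger.has_consent(user_id, "voice_transmission"):
        raise ConsentRequired(f"no voice_transmission consent for {user_id}")
    outbox.append((user_id, audio))


ledger = ConsentLedger()
outbox = []

try:
    send_voice_command(ledger, "user-1", b"audio-bytes", outbox)
except ConsentRequired:
    print("blocked: ask the user first")

ledger.grant("user-1", "voice_transmission")
send_voice_command(ledger, "user-1", b"audio-bytes", outbox)
print(len(outbox))  # 1
```

Keying consent by purpose as well as by user means agreeing to one use of voice data does not silently cover another, and `withdraw` lets a user change their mind.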

But, just like with transparency, this isn't where your obligations end. Obtaining consent is also a requirement under the Terms and Conditions of many third-party companies.

Let's look at the requirements for obtaining user consent set out by Apple and Google. Other voice assistant companies also have their own rules around this.

Apple

Developers can use Apple's SiriKit to allow their apps to work with Siri. But before your app can communicate with SiriKit via a user's device, that user must grant their permission for this.

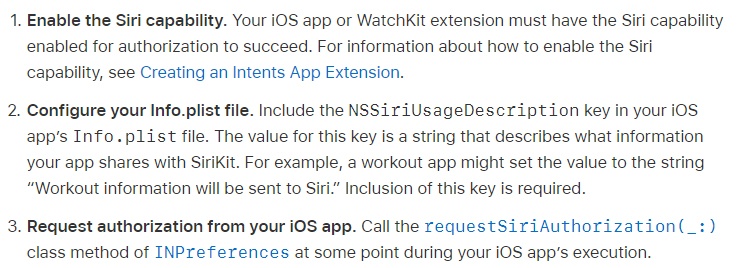

This is a three-step process, as described by Apple in its article on Requesting Authorization to Use SiriKit.

Google

As noted above, Google requires that developers of Actions for Google Assistant create a Privacy Policy. But because Actions are executed within Google Assistant itself, Google will take care of obtaining consent via its own app.

It's worth noting, however, that Google has some strict requirements about how developers use their apps to interact with users' devices. If you want to use any sort of voice interaction or activation in your app, you'll need to request permission to access the user's microphone.

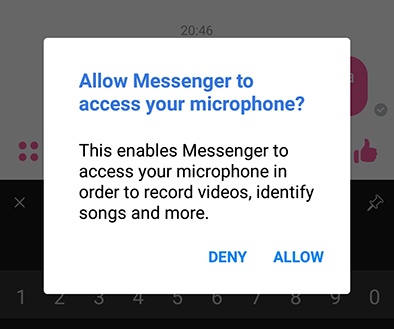

Accessing a user's microphone is classed as a "dangerous permission." Dangerous permissions require user consent at runtime. Google provides extensive guidance on how developers can request access permissions. Permission requests must be accompanied by information about why access is required.

Here's how this looks in the Facebook Messenger Android app:

Your app should only request the permissions that it needs in connection with a specific purpose.
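The idea of requesting only what a feature needs, and explaining why, can be sketched in a platform-neutral way. The example below is a simplified model and not Android's permission API: the `FEATURE_PERMISSIONS` mapping, the feature names, and the `requests_for` helper are hypothetical, and the permission strings simply borrow the names of Android's microphone and location permissions for readability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PermissionRequest:
    permission: str
    rationale: str  # shown to the user alongside the prompt


# Hypothetical mapping from app features to the permissions each one needs.
FEATURE_PERMISSIONS = {
    "voice_search": [
        PermissionRequest("RECORD_AUDIO", "Needed to hear your voice commands."),
    ],
    "nearby_restaurants": [
        PermissionRequest("ACCESS_FINE_LOCATION", "Needed to find places near you."),
    ],
}


def requests_for(feature: str, already_granted: set) -> list:
    """Return only the prompts this feature still needs, each with a rationale."""
    needed = FEATURE_PERMISSIONS.get(feature, [])
    pending = [r for r in needed if r.permission not in already_granted]
    for r in pending:
        # Refuse to prompt without telling the user why access is required.
        if not r.rationale.strip():
            raise ValueError(f"{r.permission} is missing a rationale")
    return pending


for req in requests_for("voice_search", already_granted=set()):
    print(f"{req.permission}: {req.rationale}")
```

Because prompts are looked up per feature, a user who only ever uses voice search is never asked for location access, and a permission that has already been granted is not requested again.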

Summary

There are obvious privacy issues associated with the use of voice assistants. The main burden of protecting consumer privacy falls on companies such as Google, Amazon, and Samsung (to name but a few) that provide the voice assistant software itself. But developers of products and services that interact with this software have their part to play, too.

If you're developing an app or product that uses voice assistant software, take this three-stage approach to protect your users' privacy:

- Understand your obligations under the law and under your agreements with third parties

- Be transparent with your users by creating a Privacy Policy

- Obtain consent before your app or device collects user data and sends it to a third party
