Terms & Conditions are vital for any web page or mobile app, but when you encourage user-generated content, the importance of this agreement intensifies.

Here is what you must consider when drafting Terms & Conditions for a website or an app that has user-generated content.

Why Terms & Conditions

The Terms & Conditions agreement (T&C) is also called "Terms of Use" or "Terms of Service."

This agreement sets out the rules for using a website or an app. If users fail to follow these rules, the agreement gives developers the right to terminate their accounts.

User-Generated Content presents unique problems because users have more discretion and many developers do not pre-screen the content.

In this environment, it's easy to create a culture of abuse, copyright infringement, and objectionable content.

This makes the Terms & Conditions vital when it comes to any app or website that allows user-generated content. Developers need a way to police content and at least a general Termination clause so they can enforce rules through content removal or banning users.

Unlike a Privacy Policy, a Terms & Conditions agreement is not required by law. However, it's highly recommended as a good business practice so that you can maintain the integrity of your website and app and the quality of your online communities.

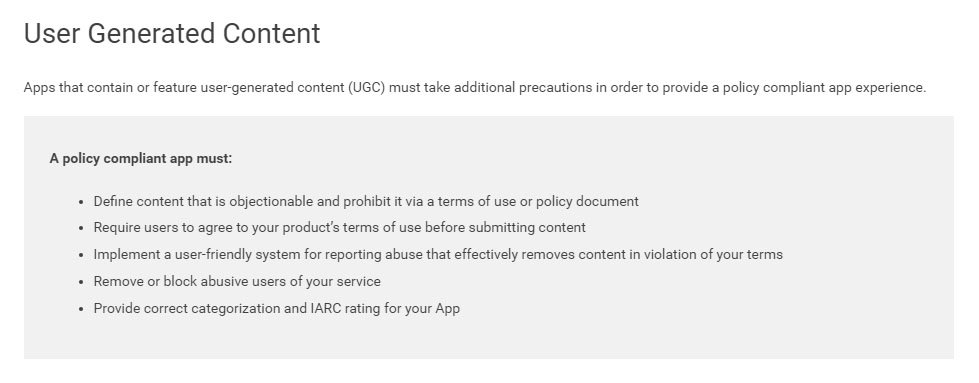

Also, third parties who host apps, like Apple App Store and Google Play, require control and removal of objectionable content in their service agreements.

Otherwise, developers cannot sell apps through these app stores. Anyone using these platforms must have a policy that allows for monitoring and reporting of objectionable content.

User-Generated Content

User-generated content covers a wide range of material: blogs, wikis, discussion posts, chats, tweets, podcasts, digital images, video, audio files, advertisements, and any other form of media generated and posted by users.

An open online environment is a double-edged sword. It inspires creativity and allows people to share material all over the world. Unfortunately, it also raises the possibility of objectionable content, abuse, and copyright infringement.

This is how your Terms & Conditions can address these issues.

Objectionable Content

Objectionable content and how to handle it is a big issue with user-generated content.

Google Play and the Apple App Store are committed to preserving their respectable environments, so they require all developers using their platforms to have policies in place for handling this content.

Google Play has a long developer policy that reads like a plain-language Terms & Conditions. It offers a long list of restricted content categories, including sexually explicit content, child endangerment, violence, harassment and abuse, hate speech, and the encouragement of illegal activities.

To avoid violating these policies, Google Play requires this of its developers:

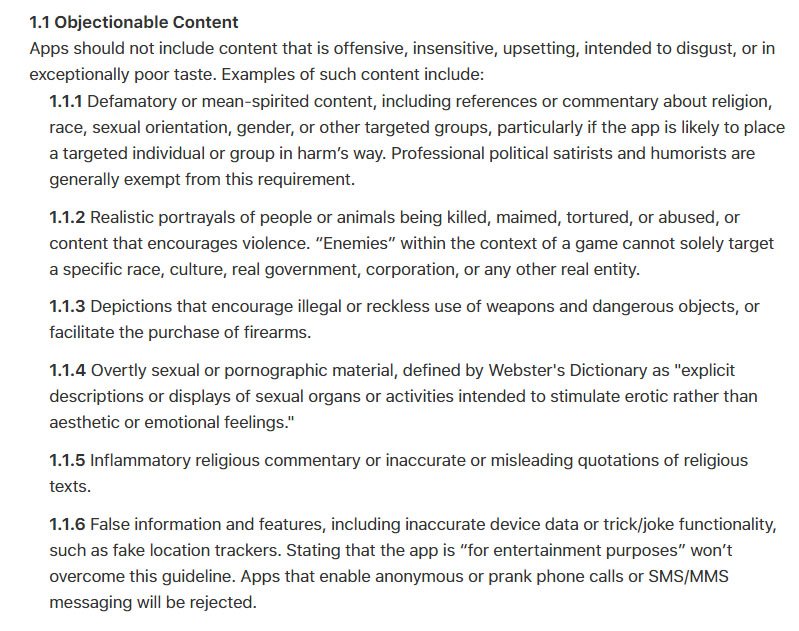

The Apple App Store offers similar guidelines but presents them in a less dense format. It starts by defining objectionable content:

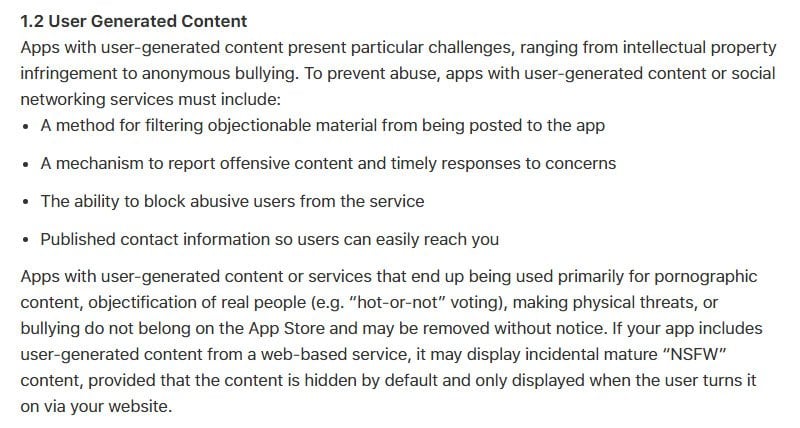

After making its prohibitions clear, it presents Apple's policy on user-generated content:

Both Apple and Google encourage developers to monitor content and, in the absence of monitoring, to allow reporting and enforcement. At the very least, they require a Termination clause that lets you remove offending users and content.

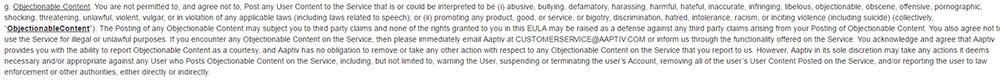

Aaptiv, a personal fitness app that includes photo share and discussion boards, provides a good example of how to appropriately address objectionable content.

Presented in its End-User License Agreement and Terms of Service agreement (a combined agreement), there's no doubt what is prohibited. Aaptiv's agreement describes objectionable content and provides an email address where users can submit reports:

Many apps and websites are less direct about objectionable content. If your app has a high risk of attracting it, take the approach of Aaptiv so there's no doubt that you're in compliance with App Store or Google Play policy.

Harassment and Abuse

There's always a chance of abusive behavior when you host an online community that allows user-generated content.

This has become a hot issue and repeated incidents can bring bad publicity to your website/app. Also, in some cases, such as with hate speech or stalking, you could face legal liability.

This type of abusive behavior falls under objectionable content but frequently requires a specific mention.

Apple specifically mentions "bullying" when it addresses user-generated content in its policy. It acknowledges this is a potential problem before going into its monitoring requirements for developers:

Google Play's list of prohibited material addresses harassment directly:

Harassment may be addressed in an objectionable content provision, as with Aaptiv. In many cases, it's listed under the "User Conduct" section as a prohibited activity.

500px, a photo-sharing platform and marketplace, takes that approach and specifically mentions harassment:

If there's a high possibility of people using your app to harass others, this requires specific mention, maybe even its own reporting process.

Intellectual Property Infringement

Website owners can be held liable if their services enable copyright violations. The Digital Millennium Copyright Act (DMCA) offers a safe harbor from that liability if, among other things, you provide a reporting process for these violations.

The typical report comes from a third party who discovers their copyrighted material on your site. While there may be a separate page that supports reporting the incident, the procedure is often also included in the Terms & Conditions agreement, just to be safe.

Sometimes the Terms & Conditions provision is brief with guidance to the DMCA page.

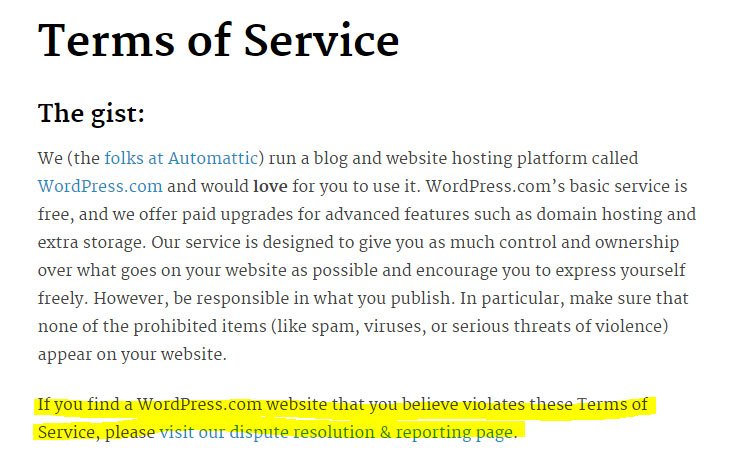

WordPress, a blogging platform run by Automattic, takes this approach:

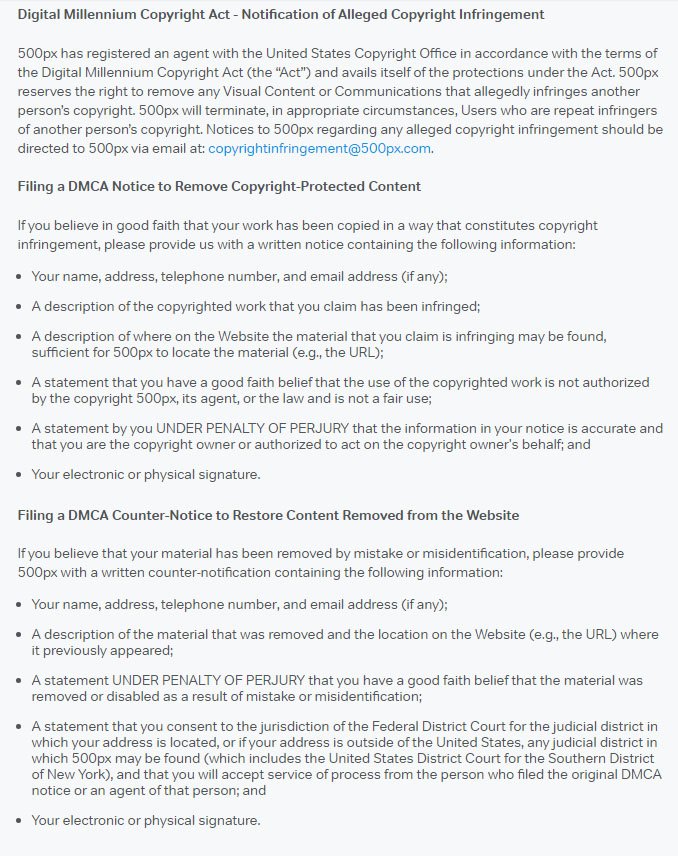

500px, with its wide sharing platform, also creates opportunities for copyright infringement because its content is created by users.

As expected, its DMCA provisions are long and detailed to ensure these incidents are addressed. There is also a counter-notice provision in case the user does not believe they infringed:

Copyright infringement is not an issue to leave to general references. Even if all you do is host discussion boards, there's still a chance of users appropriating copyrighted material.

Provide a DMCA process anytime you encourage users to create content.

Addressing Developer Responsibility

Even with their strict policies, Apple and Google Play recognize that it's impossible to pre-screen all user-generated content.

Social media users on Facebook and Twitter produce millions of content items every day, if not billions.

That is why a report function must be available.

Before something is removed or a user banned, there's still a chance that other users will see the objectionable material.

To address this, developers take a warning and waiver approach. They are willing to give users a heads-up on this possibility but they also do not assume liability for these instances.

This is not treated as a Liability Limitation clause or a Waiver clause, but is often given its own heading in the Terms & Conditions agreement.

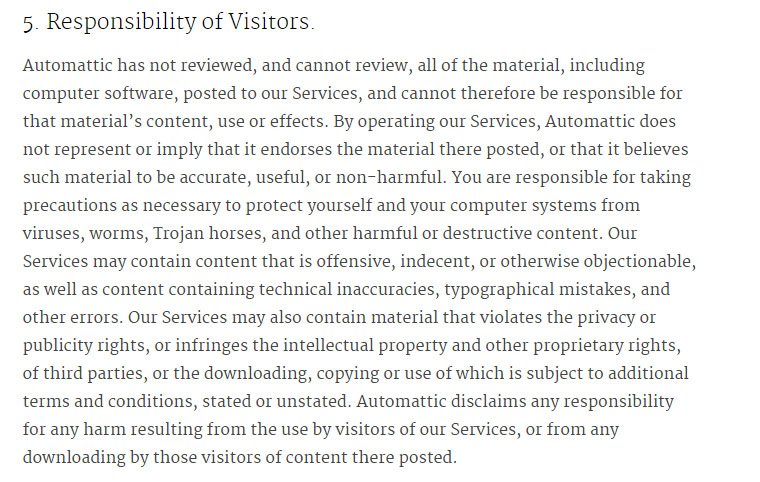

One example of this approach is WordPress. It mentions the impossibility of viewing everything before it posts:

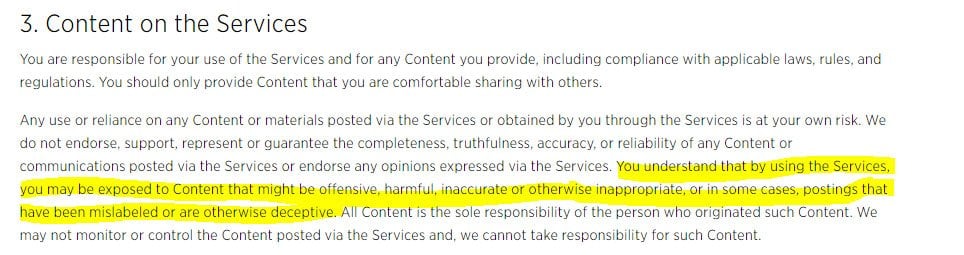

Twitter is also in this impossible situation since tweets are posted by the thousands every hour. It takes the same warning and waiver approach with a similar heading:

This may look like an abdication of responsibility that puts both services in violation of Google Play or Apple policies. Neither service is in violation of those terms because each grants itself wide discretion to ban users and cancel accounts.

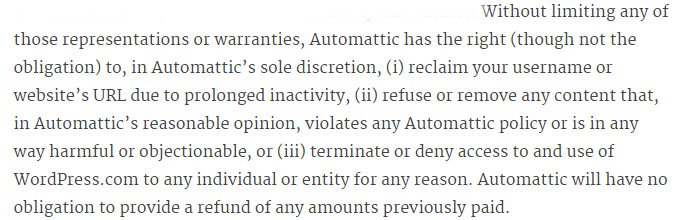

WordPress makes it clear it can remove material it deems objectionable, even though it never defines what content falls under that term. This gives it discretion to consider complaints on a case-by-case basis:

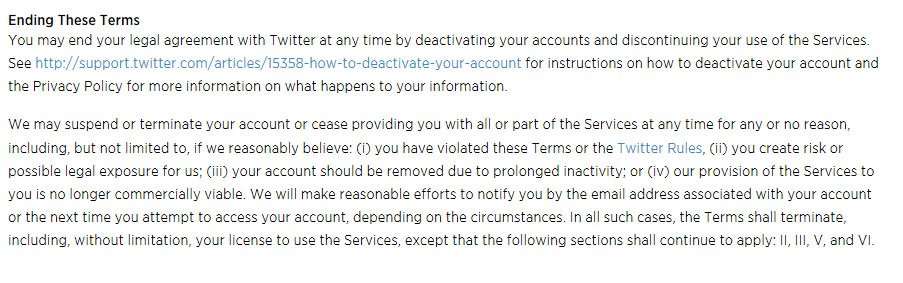

Twitter's Terms of Service agreement contains a Termination clause with the same flexibility:

Deciding to only remove material or ban users on a case-by-case basis is a legitimate decision.

However, you must take steps beyond just stating it in your Terms & Conditions. That is where a "Report" button comes in.

The "Report" Button

A "Report" button in a conspicuous place is the best way to address all these challenges. That includes copyright infringement, harassment, and objectionable content.

Since it remains technologically impossible to monitor all user-generated content, the least you can do is make reporting easy for users.

Also, as noted in Google Play and Apple policies, a reporting process is required. The trick is to choose a user-friendly option.

Some platforms provide a link to report content items in their Terms & Conditions. This is an example from WordPress, which offers a dispute resolution and reporting page:

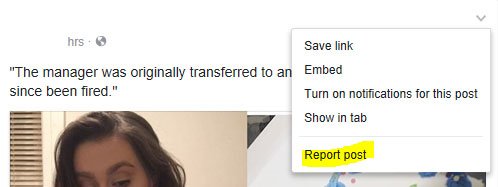

Social media apps allow users to report specific posts and pages. This is often provided in a drop-down menu on the post.
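
As a rough illustration of what sits behind such a menu item, here is a minimal Python sketch of recording a report against a post or a user. Everything in it is an assumption made for illustration: the `Reason` categories mirror the three problem areas discussed in this article, and the names `Report` and `submit_report` are hypothetical rather than taken from any platform's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Reason(Enum):
    """Hypothetical report categories, mirroring the issues in this article."""
    OBJECTIONABLE = "objectionable_content"
    HARASSMENT = "harassment"
    COPYRIGHT = "copyright_infringement"


@dataclass
class Report:
    reporter_id: str
    target_type: str  # "post" or "user"
    target_id: str
    reason: Reason
    details: str = ""
    # Timestamp the report so reviewers can see how long it has been waiting.
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def submit_report(queue, reporter_id, target_type, target_id, reason, details=""):
    """Validate a report and append it to a review queue (a plain list here)."""
    if target_type not in ("post", "user"):
        raise ValueError(f"unsupported target type: {target_type!r}")
    report = Report(reporter_id, target_type, target_id, reason, details)
    queue.append(report)
    return report


if __name__ == "__main__":
    pending = []
    submit_report(pending, "user-1", "post", "post-42", Reason.HARASSMENT)
    print(len(pending), pending[0].reason.value)
```

Allowing both "post" and "user" as targets reflects the two reporting levels described in this section: a single item, or an entire account or page.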

This is how Facebook provides a "report" button on specific posts:

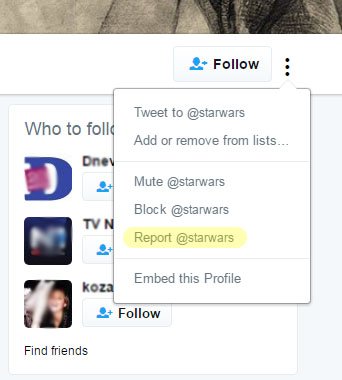

Twitter takes a similar approach:

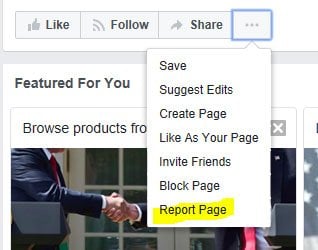

Depending on your website or app, there's also a possibility of an entire page promoting objectionable content. It would be impractical and burdensome to require users to report every post or tweet from a page.

Twitter provides the opportunity to report a user right next to the "Follow" button:

You can do the same with Facebook, but for Facebook pages:

In addition to providing the opportunity to report, you must have an in-house process for review. Designated departments or email addresses are the best way to do this.
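
One way to picture such a process is a small routing step that sends each incoming report to a designated inbox based on its category. The Python sketch below is only an assumption about how this could look: the category names, the `example.com` addresses, and the functions `route_report` and `triage` are all hypothetical placeholders.

```python
# Hypothetical mapping from report category to a designated review inbox.
REVIEW_INBOXES = {
    "copyright_infringement": "dmca@example.com",
    "harassment": "safety@example.com",
    "objectionable_content": "moderation@example.com",
}
# Unknown categories fall back to general moderation (an assumption).
DEFAULT_INBOX = "moderation@example.com"


def route_report(reason):
    """Return the inbox responsible for reviewing a given report category."""
    return REVIEW_INBOXES.get(reason, DEFAULT_INBOX)


def triage(reports):
    """Group pending reports by the inbox that should review them."""
    by_inbox = {}
    for report in reports:
        inbox = route_report(report["reason"])
        by_inbox.setdefault(inbox, []).append(report)
    return by_inbox


if __name__ == "__main__":
    pending = [
        {"target": "post-1", "reason": "harassment"},
        {"target": "post-2", "reason": "copyright_infringement"},
        {"target": "user-9", "reason": "spam"},  # not in the mapping
    ]
    for inbox, items in triage(pending).items():
        print(inbox, [item["target"] for item in items])
```

Keeping copyright reports in their own inbox matches the separate DMCA procedure discussed earlier, while everything else goes through general review.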

Allowing user-generated content often creates a marketplace of ideas. But it also contains landmines in the form of objectionable content, the possibility of harassment, and copyright infringement.

Your Terms & Conditions not only needs to state the rules so users have standards of conduct, but must also give you the grounds to take enforcement action.

A reporting function empowers users and is another tool in creating a functional online community.
